Models

You have two main options when it comes to LLMs:

- Using cloud models

- Running models locally

Running full-size models locally is currently impractical, or at least costly. A variety of quantised and/or distilled local models is available, including some fine-tuned for code generation, but you can expect them to be less capable than cloud models.
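A rough back-of-the-envelope calculation shows why full-size models are costly to run locally: the weights alone need about (parameter count × bits per weight ÷ 8) bytes. The sketch below uses a hypothetical 70B-parameter model. It counts weights only and ignores the KV cache, activations and runtime overhead, so real memory requirements are higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in gigabytes (1 GB = 1e9 bytes).

    Ignores KV cache, activations and runtime overhead, so actual usage is higher.
    """
    bytes_total = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


if __name__ == "__main__":
    params = 70e9  # hypothetical 70B-parameter model
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit: ~{weight_memory_gb(params, bits):.0f} GB")
```

At 16 bits per weight this gives roughly 140 GB, while 4-bit quantisation brings it to roughly 35 GB. That difference is why quantised variants are the usual choice for local use.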

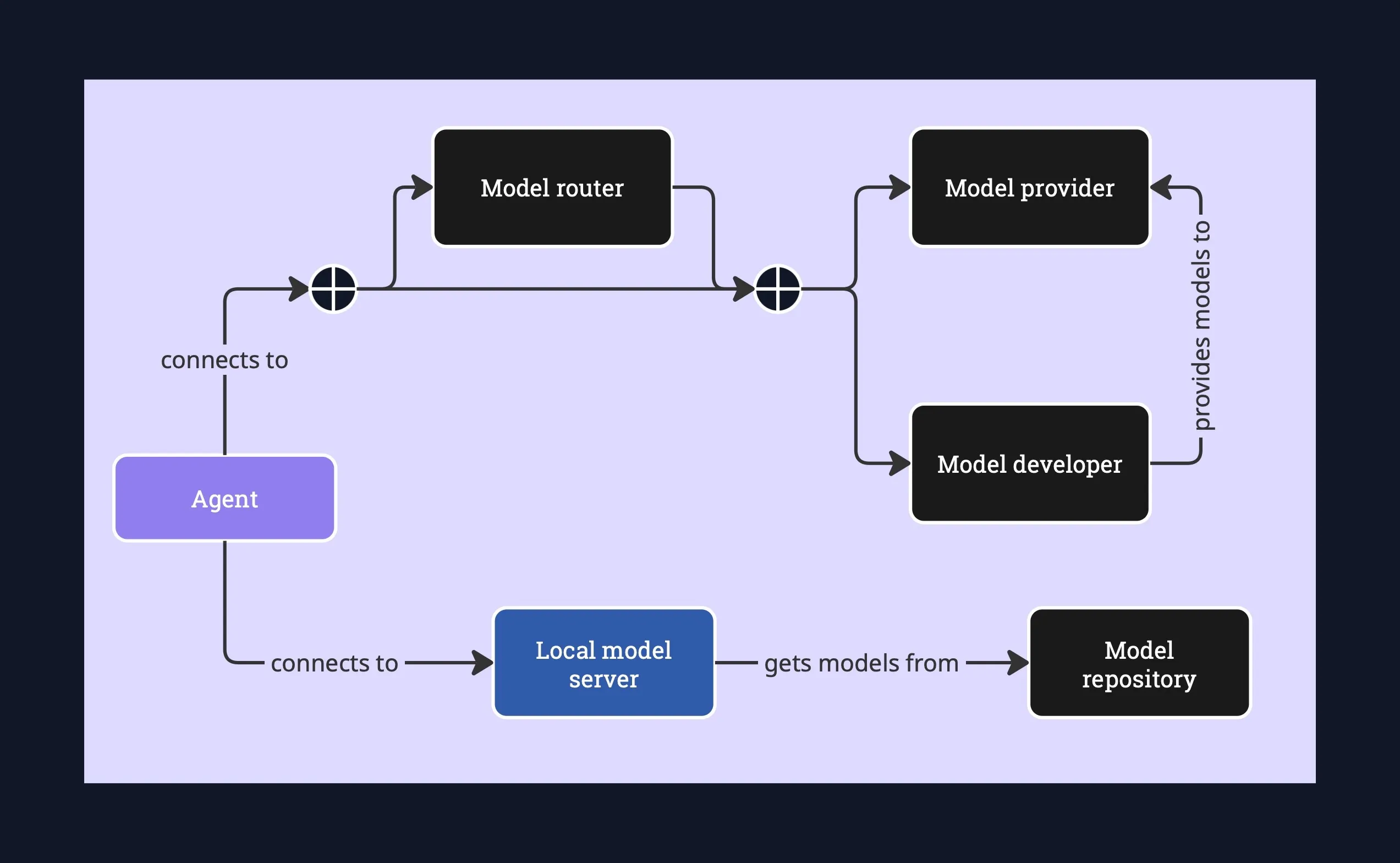

When it comes to cloud models, model developers can make them available on their own infrastructure, and they can additionally supply the models to model providers to run on their infrastructure. Model providers also offer the ability to fine-tune models for specific use cases.

Additionally, you can put a model proxy in front of the model developer/model provider. This gives you the advantages of easy model switching, a single bill, and other org-level features such as budgets, team spend attribution, and rate limiting.
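To make the proxy idea concrete, here is a toy, in-process sketch of what a proxy does with each request: it resolves a model alias to an upstream provider and model, and checks and records spend per team. Everything in it is hypothetical, including the `ToyModelProxy` class, the provider and model names, the team name and the costs. It does not reflect how any particular proxy product is implemented.

```python
from dataclasses import dataclass, field


@dataclass
class ToyModelProxy:
    """Hypothetical in-process stand-in for a model proxy (not a real library).

    routes:  model alias -> (provider, provider-specific model ID)
    budgets: spending limit per team, in USD
    spend:   spend recorded per team, used for attribution
    """

    routes: dict[str, tuple[str, str]]
    budgets: dict[str, float]
    spend: dict[str, float] = field(default_factory=dict)

    def route(self, team: str, alias: str, cost: float) -> tuple[str, str]:
        """Resolve an alias to its upstream model and charge the cost to the team."""
        if alias not in self.routes:
            raise KeyError(f"unknown model alias: {alias!r}")
        spent = self.spend.get(team, 0.0)
        # Reject the call if it would push the team over its budget.
        if spent + cost > self.budgets.get(team, 0.0):
            raise PermissionError(f"team {team!r} would exceed its budget")
        # A real proxy would forward the request upstream here; this sketch only records spend.
        self.spend[team] = spent + cost
        return self.routes[alias]


if __name__ == "__main__":
    proxy = ToyModelProxy(
        routes={"fast": ("provider-a", "small-model"), "smart": ("provider-b", "large-model")},
        budgets={"search-team": 1.00},
    )
    print(proxy.route("search-team", "smart", cost=0.40))  # ('provider-b', 'large-model')
    print(proxy.spend)  # {'search-team': 0.4}
```

Clients only ever name an alias such as `"smart"`, so switching the underlying model is a one-line change to `routes` rather than a change in every client. Rate limiting is left out to keep the sketch short.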

To use a local model, you need to run a local model server equipped with a model downloaded from a model repository.
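As an illustration, Ollama runs a local model server that exposes an HTTP API, by default at `http://localhost:11434`. The sketch below uses only the Python standard library to build a non-streaming request for its `/api/chat` endpoint. It assumes Ollama is installed and running, and that a model has already been downloaded with `ollama pull`. The model name `llama3.2` is only an example.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local address


def build_ollama_chat_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming chat request for a locally running Ollama server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON object instead of a stream of chunks
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(req: urllib.request.Request) -> str:
    """Send the request to the local server and return the assistant's reply text."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["message"]["content"]
```

With the server running, `print(send_chat(build_ollama_chat_request("llama3.2", "Explain recursion in one sentence.")))` prints the model's reply. No traffic leaves your machine.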

Below you’ll find the major providers of cloud models and repositories of local models. I’ve also listed a couple of proxy/router tools which help manage access to models.

Model developers

Alibaba

Alibaba has developed a family of models called Qwen.

Anthropic

Anthropic develops the Claude models. It was founded by former OpenAI employees and has received substantial investment from Amazon.

DeepSeek

DeepSeek develops eponymous models, including DeepSeek-R1 which is a reasoning model.

ElevenLabs

ElevenLabs specialises in speech, developing models for text-to-speech, speech-to-text and voice cloning.

Google

Google DeepMind, Google's AI research lab, develops the Gemini and Gemma models, as well as Imagen, Lyria and Veo for image, music and video generation.

Meta

Meta develops the Llama models. Llama 4 Scout theoretically supports a context window of up to 10M tokens, one of the largest of any model.

Mistral

Mistral develops models such as Mistral, Mixtral, and Magistral (a reasoning model).

OpenAI

OpenAI is the developer of ChatGPT and the GPT family of models. It has also developed DALL-E for text-to-image generation, Sora for text-to-video generation, and Whisper for speech recognition and transcription. It has received significant investment from Microsoft.

Phind

Phind is an “AI search engine”. While it can generate code, it cannot be integrated with agents like Cursor. It also has an image generation and editing tool.

Perplexity

Perplexity provides several models based on foundation models from other providers (it doesn’t have its own foundation models).

Stability AI

Stability AI has developed Stable Diffusion, a model for text-to-image generation and image modification. It has since expanded into audio, video and 3D model generation.

xAI

xAI develops the Grok family of models.

z.ai

z.ai develops the GLM family of models.

Model providers

Ollama is a new entrant into the model provider space, allowing users to run a small number of open-weight models on its infrastructure.

Replicate hosts a variety of text, image and video models. Together.ai hosts open-weight models.

Big Tech also provides model hosting: Vertex AI is part of Google Cloud, Azure AI is the Microsoft Azure equivalent, and Amazon Bedrock is the AWS equivalent.

Model hubs for local LLMs

Hugging Face is the major repository of models. Ollama and LM Studio also provide libraries of models available for download.

Model routers

LiteLLM

LiteLLM is an LLM proxy that is useful in an organisation context. It provides features such as spend attribution, budgets and rate limiting.

OpenRouter

OpenRouter provides a unified API and a convenient way to use different models via a single account.
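OpenRouter exposes an OpenAI-compatible chat completions endpoint, so moving to a different model only requires changing the `model` string in the request. The minimal standard-library sketch below assumes your API key is stored in an `OPENROUTER_API_KEY` environment variable. The model IDs in the usage note are examples only and may not match OpenRouter's current catalogue.

```python
import json
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"


def build_openrouter_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for OpenRouter.

    The request shape is the same for every model; only the `model` string changes.
    """
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # one key for all models
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice in the response."""
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)["choices"][0]["message"]["content"]
```

For example, with `os` imported, `for m in ("openai/gpt-4o", "anthropic/claude-3.5-sonnet"): print(m, send(build_openrouter_request(m, "Hello", os.environ["OPENROUTER_API_KEY"])))` sends the same prompt to models from two different developers through a single account.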